DALL·E 2 – The AI that Learned the Relationship Between Images and Text Used to Describe Them

DALL·E 2 is a new AI system that can create realistic images and art from a description in natural language.

DALL·E 2 can create original, realistic images and art from a text description. It can combine concepts, attributes, and styles.


DALL·E 2 can expand images beyond what’s in the original canvas, creating new, larger compositions.

DALL·E 2 can make realistic edits to existing images from a natural language caption. It can add and remove elements while taking shadows, reflections, and textures into account.

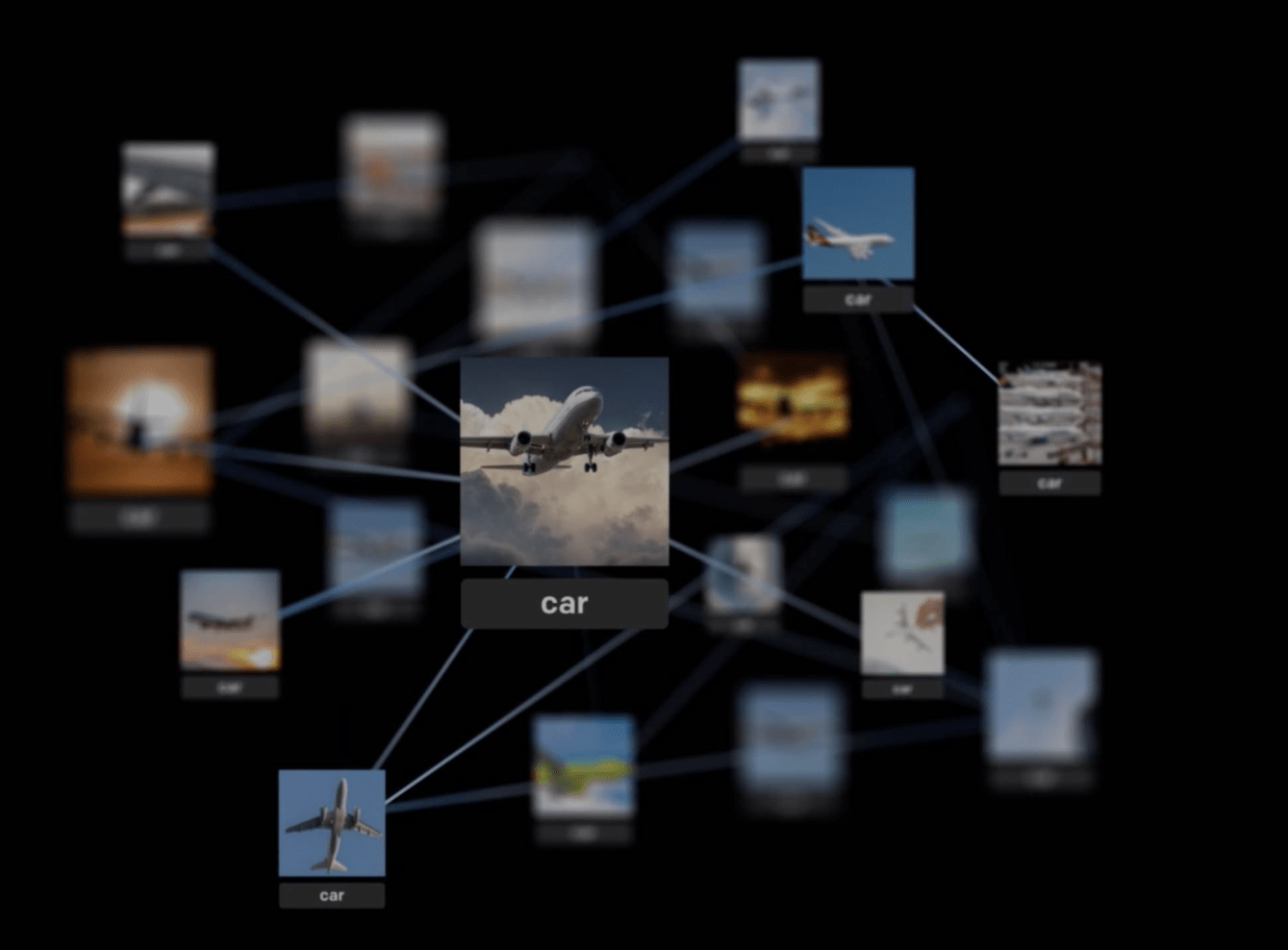

DALL·E 2 can take an image and create different variations of it inspired by the original.

DALL·E 2 has learned the relationship between images and the text used to describe them. It uses a process called “diffusion,” which starts with a pattern of random dots and gradually alters that pattern towards an image when it recognizes specific aspects of that image.
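The diffusion process described above can be illustrated with a toy, DDPM-style sampling loop. This is a minimal sketch under loud assumptions, not DALL·E 2's actual model: the "image" is a short list of pixel values, the linear noise schedule is a common textbook choice, and `oracle_noise_predictor` is a hypothetical stand-in for the trained neural network. Instead of recognizing aspects of an image, it simply knows the target. Starting from pure random noise, each step removes part of the predicted noise, so the pattern moves gradually toward the target.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, linearly spaced (an assumed, common choice)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def oracle_noise_predictor(x_t, alpha_bar_t, target):
    """Hypothetical stand-in for a trained network: returns the exact noise
    separating x_t from the (known) target image."""
    return [
        (x - math.sqrt(alpha_bar_t) * x0) / math.sqrt(1.0 - alpha_bar_t)
        for x, x0 in zip(x_t, target)
    ]


def sample(target, num_steps=50, seed=0):
    """Denoise a random pattern step by step toward `target`."""
    rng = random.Random(seed)
    betas = linear_beta_schedule(num_steps)
    alphas = [1.0 - b for b in betas]

    # Cumulative products alpha_bar_t = alpha_1 * ... * alpha_t.
    alpha_bars = []
    prod = 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)

    # Start from a pattern of pure random noise ("random dots").
    x = [rng.gauss(0.0, 1.0) for _ in target]

    for t in reversed(range(num_steps)):
        eps = oracle_noise_predictor(x, alpha_bars[t], target)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Remove the scaled predicted noise to get the mean of the next, cleaner pattern.
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            # Add a little fresh noise on every step except the last.
            sigma = math.sqrt(betas[t])
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


if __name__ == "__main__":
    target = [0.0, 0.5, 1.0, -0.5]
    print(sample(target))
```

In a real system the predictor would be a learned network conditioned on information derived from the text description, with no access to the target. What this sketch is meant to show is the loop structure: repeatedly predict the noise and step toward a cleaner image.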

In January 2021, OpenAI introduced DALL·E. One year later, our newest system, DALL·E 2, generates more realistic and accurate images with 4x greater resolution.

When evaluators were asked to compare 1,000 image generations from each model, they preferred DALL·E 2 over DALL·E 1 for caption matching and photorealism.

DALL·E 2 began as a research project and is now available in beta. Safety mitigations we have developed and continue to improve upon include:

We’ve limited DALL·E 2’s ability to generate violent, hateful, or adult images. By removing the most explicit content from the training data, we minimized DALL·E 2’s exposure to these concepts. We also used advanced techniques to prevent photorealistic generations of real individuals’ faces, including those of public figures.

Our content policy does not allow users to generate violent, adult, or political content, among other categories. We won’t generate images if our filters identify text prompts or image uploads that may violate our policies. We also have automated and human monitoring systems to guard against misuse.

Learning from real-world use is an important part of developing and deploying AI responsibly. We began by previewing DALL·E 2 to a limited number of trusted users. As we learned more about the technology’s capabilities and limitations, and gained confidence in our safety systems, we gradually added more users and made DALL·E 2 available in beta in July 2022.
