NSA ANT Catalog – Access Network Technology – ANT Technology

Advanced Network Technologies (ANT) is a department of the US National Security Agency (NSA) that provides tools for the NSA's Tailored Access Operations (TAO) unit and other internal and external clients. With these tools it is possible to eavesdrop on conversations (room bugging), personal computers, networks, video displays, and much more, using covertly installed hard- and software implants (covert ware). Most of it is built from commercial off-the-shelf (COTS) parts.

Some of these products are listed in the ANT Product Catalogue, an internal NSA document that was intended for the US intelligence and law-enforcement community, and that was disclosed to the press on 29 December 2013 by an unknown source. It is believed that this source is not NSA whistleblower Edward Snowden, which means there is at least one other whistleblower [3][5].


LOUDAUTO is the codename or cryptonym of a covert listening device (bug), developed around 2007 by the US National Security Agency (NSA) as part of their ANT product portfolio. The device is an audio-based RF retro reflector that must be activated (illuminated) by a strong continuous wave (CW) 1 GHz radio frequency (RF) signal, beamed at it from a nearby listening post (LP).

Although the device is activated by an external illumination signal, it must also be powered by a local 3 V DC source – typically provided by two button cells – from which it draws just 15 µA. In this respect, it is a semi-passive element (SPE).

Room audio is picked up and amplified by a Knowles miniature microphone, that modulates the re-radiated illumination signal by means of Pulse Position Modulation (PPM). The re-emitted signal is received at the listening post – typically by a CTX-4000 or PHOTOANGLO system – and further processed by means of COTS equipment.
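The principle of Pulse Position Modulation can be illustrated with a minimal sketch. It assumes that each audio sample is quantised to one of 16 levels and that every frame contains exactly one pulse, the position of which carries the sample value. The frame size and the function names are illustrative assumptions and are not taken from the LOUDAUTO datasheet.

```python
def ppm_encode(samples, slots_per_frame=16):
    """Turn each sample (0 .. slots_per_frame-1) into a frame with one pulse."""
    frames = []
    for sample in samples:
        if not 0 <= sample < slots_per_frame:
            raise ValueError("sample out of range")
        frame = [0] * slots_per_frame
        frame[sample] = 1  # the position of the pulse carries the value
        frames.append(frame)
    return frames


def ppm_decode(frames):
    """Recover each sample by locating the pulse inside its frame."""
    return [frame.index(1) for frame in frames]


# Hypothetical quantised audio samples
samples = [3, 0, 15, 7]
frames = ppm_encode(samples)
decoded = ppm_decode(frames)
print(decoded)
```

In a real device the pulse position would be a time offset on the re-radiated carrier rather than an index in a list, but the encoding idea is the same.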

LOUDAUTO is part of the ANGRYNEIGHBOR family of radar retro-reflectors. In this context, the term radar refers to the continuous wave activation beam from the listening post, that operates in the 1-2 GHz frequency band. The processing and demodulation of the returned signal is typically done by means of a commercial spectrum analyser, such as the Rohde & Schwarz FSH-series, that has been enhanced with FM demodulating capabilities. In many respects, LOUDAUTO can be seen as a further development of the CIA's EASY CHAIR passive elements, combined with later active bugging devices, like the SRT-52 and SRT-56, which also used Pulse Position Modulation (PPM).

Information about LOUDAUTO was first published in an internal Top Secret (TS) NSA document on 1 August 2007, that was available to the so-called five eyes countries (FVEY) only. Although it was scheduled for declassification on 1 August 2032 (25 years after its inception), it was revealed to the public on 29 December 2013 by the German magazine Der Spiegel. The source of this leak is still unknown. According to a product datasheet of 7 April 2009, the price of a single LOUDAUTO device was just US$ 30. According to that document, the end processing – presumably the demodulation – was still under development in 2009 [1].

FIREWALK

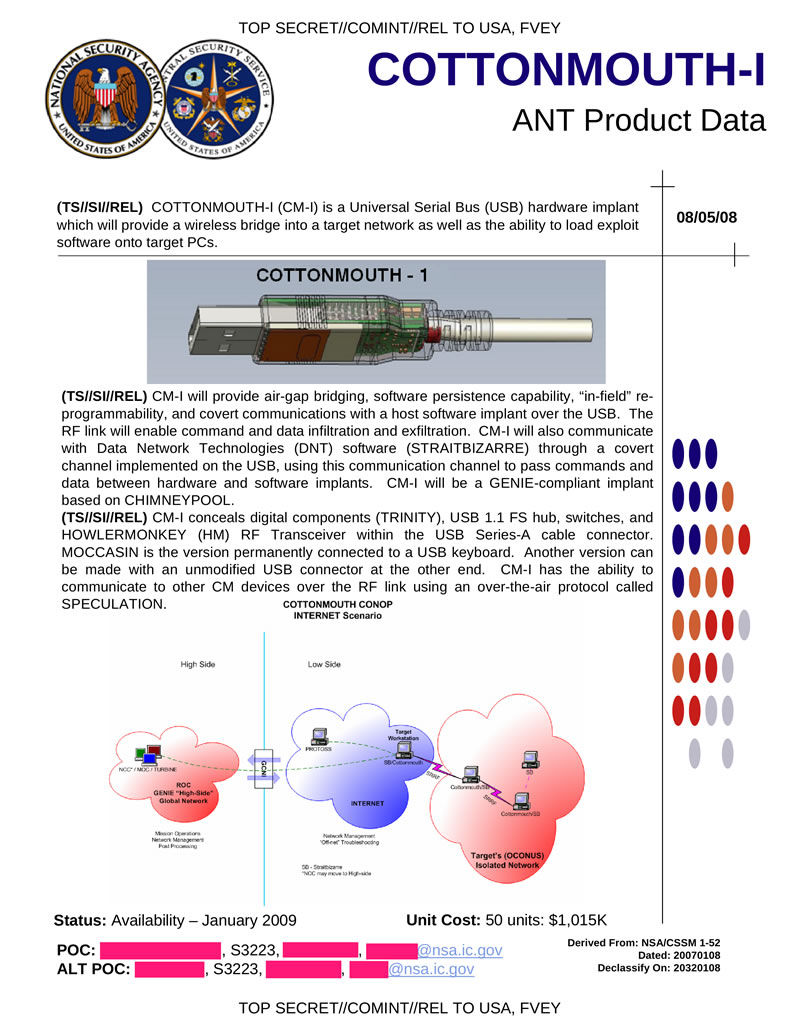

FIREWALK is the codename or cryptonym of a covert implant, developed around 2007 by or on behalf of the US National Security Agency (NSA) as part of their ANT product portfolio. The device is implanted into the RJ45 socket of the Ethernet interface of a PC or a network peripheral, and can intercept bidirectional gigabit ethernet traffic and inject data packets into the target network.


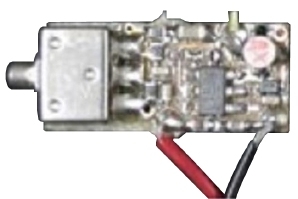

The implant is housed inside a regular stacked RJ45/twin-USB socket, such as the one shown in the image on the right. At the top are two LEDs and inside are the Ethernet transformer and in some cases even an Ethernet PHY (e.g. Broadcom).

NSA was able to manipulate this standard off-the-shelf computer part – probably somewhere in the supply chain or directly at the factory where the product was assembled – and replace the internal electronics by a miniature ARM9 / FPGA computer platform, named TRINITY [2].

Also implanted inside the socket is a miniature wideband radio frequency (RF) transceiver, named HOWLERMONKEY. It allows the implant to bypass an existing firewall or air gap protection [3].

The implant is suitable for 10/100/1000 Mb (gigabit) networks and intercepts all network traffic, which is then sent through a VPN tunnel, using the HOWLERMONKEY RF module. If the distance between the target network and the node to the Remote Operations Center (ROC) is too large, other implants in the same building may be used to relay the signal. The implant can also be used to insert data packets into the target network. The diagram below shows the construction.

At the left are the RJ45 and twin-USB sockets, with two LED indicators at the top. Immediately behind the sockets is a PCB with the power circuitry. At the back is the actual NSA FIREWALK implant, which is built around a TRINITY multi-chip module, consisting of a 180 MHz ARM9 microcontroller, an FPGA with 1 million gates, 96 MB SDRAM and 4 MB Flash memory. The latter contains the firmware, which can be tailored for a specific application or operation. In practice, the firmware would filter the network packets and relay the desired ones to the NSA’s ROC, using a nearby RF node (outside the building) and the internet to transport the intercepted data [1].

The above information was taken from original NSA datasheets from January 2007, that were disclosed to the press in 2013 by former CIA / NSA contractor Edward Snowden. The items were developed by, or on behalf of, the cyber-warfare intelligence-gathering unit of the NSA, known as The Office of Tailored Access Operations (TAO), since renamed Computer Network Operations [4].

Cryptonyms

All products in the ANT catalogue are identified by a codeword or cryptonym, which is sometimes abbreviated. At present, the following ANT cryptonyms are known:

NSA Spy Gear

- ANGRYNEIGHBOR

- CANDYGRAM

- CROSSBEAM

- CTX4000

- CYCLONE Hx9

- DEITYBOUNCE

- DROPOUTJEEP

- EBSR

- ENTOURAGE

- FEEDTROUGH

- GENESIS

- GINSU

- GODSURGE

- GOPHERSET

- COTTONMOUTH-I

- COTTONMOUTH-II

- COTTONMOUTH-III

- FIREWALK

- GOURMETTROUGH

- HALLUXWATER

- HEADWATER

- HOWLERMONKEY

- IRATEMONK

- IRONCHEF

- JETPLOW

- JUNIORMINT

- LOUDAUTO

- MAESTRO-II

- MONKEYCALENDAR

- NEBULA

- NIGHTSTAND

- NIGHTWATCH

- PHOTOANGLO

- PICASSO

- RAGEMASTER

- SCHOOLMONTANA

- SIERRAMONTANA

- SOMBERKNAVE

- SOUFFLETROUGH

- SPARROW II

- STUCCOMONTANA

- SURLYSPAWN

- SWAP

- TOTECHASER

- TOTEGHOSTLY

- TAWDRYYARD

- TRINITY

- TYPHON HX

- WATERWITCH

- WISTFULTOLL

Room surveillance

- CTX-4000

- LOUDAUTO

- NIGHTWATCH

- PHOTOANGLO

- TAWDRYYARD

Documentation

- NSA, ANT Product catalog

8 January 2007. Obtained from [2].

References

- IC off the Record, The NSA Toolbox: ANT Product Catalog. 29-30 December 2013.

- Jacob Appelbaum, Judith Horchert, Christian Stöcker, Catalogue Advertises NSA Toolbox. Spiegel Online, 29 December 2013.

- Wikipedia, NSA ANT catalog. Retrieved November 2020.

- Wikipedia, Tailored Access Operations. Retrieved November 2020.

- James Bamford, Commentary: Evidence points to another Snowden at the NSA. Reuters, 22 August 2016.

Further information

Backdoors – Exploitable weaknesses in a cipher system

Deliberate weakening of a cipher system, commonly known as a backdoor, is a technique that is used by, or on behalf of, intelligence agencies like the US National Security Agency (NSA) – and others – to make it easier for them to break the cipher and access the data. It is often thought that intelligence services have a Master Key that gives them instant access to the data, but in reality it is often much more complicated, and requires the use of sophisticated computing skills.

In the past, intelligence services like the NSA weakened the ciphers just enough to allow it to be barely broken with the computing power that was available to them (e.g. by using their vast array of Cray super computers), assuming that other parties did not have that capability. Implementing a backdoor is difficult and dangerous, as it might be discovered by the user — after which it can no longer be used — or by another party, in which case it can be exploited by an adversary.

Below is a non-exhaustive overview of known backdoor constructions and examples:

- Weakening of the encryption algorithm

- Weakening the KEY

- Hiding the KEY in the cipher text

- Manipulation of user instructions (manual)

- Key generator with predictive output

- Implementation of a hidden ‘unlock’ key (master key)

- Key escrow

- Side channel attack (TEMPEST)

- Unintended backdoors

- Covertly installed hard- and/or software (spyware)

Weakening of the algorithm

One of the most widely used types of backdoor is weakening of the algorithm. This was done with mechanical cipher machines – such as the CX-52 – electronic ones – such as the H-460 – and software-based encryption. NSA often weakened the algorithm just enough to break it with the help of a super computer (e.g. Cray), assuming that adversaries did not have that capacity.

This solution is universal. It can be applied to mechanical, electronic and computer-based encryption systems. One of the first known examples is the weakening of the Hagelin CX-52 by Peter Jenks of the NSA, in the early 1960s [1].

The Hagelin CX-52 had the problem that it was theoretically safe when used correctly. It was possible however to configure the device in such a way that it produced a short cycle, as a result of which it became easy to break. Jenks modified the CX-52 in such a way that it always produced a long cycle, albeit one that he could predict.

The modified product was designated CX-52M and was marketed by Crypto AG as a new version with improved security, which customers immediately started ordering in quantities. He repeated the exercise in the mid-1960s, when Crypto AG moved from mechanical to electronic designs.

The first electronic cipher machines were built around (non)linear feedback shift registers – LFSR or NLFSR – built with the (then) newest generation of integrated circuits (ICs). This part is commonly known as the crypto heart or the cryptologic. Jenks manipulated the shift registers in such a way that the design seemed robust from the outside. Nevertheless NSA could break it, as they knew the exact nature of the built-in weakness.

Manipulating the cryptologic, or actually the cryptographic algorithm, requires quite some mathematical ingenuity, and is not trivial at all.
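How much the cycle length of a shift register depends on its feedback can be shown with a toy example. The sketch below is a 4-bit linear feedback shift register with two different tap selections. It is purely illustrative: the register size, the taps and the function name are assumptions and have nothing to do with the actual Crypto AG designs.

```python
def lfsr_period(taps, nbits=4, seed=1):
    """Count the steps until a Fibonacci LFSR returns to its seed state.

    taps are 1-based bit positions that are XORed into the new bit.
    The highest bit (nbits) must be tapped, otherwise the register is
    not invertible and might never return to the seed.
    """
    if nbits not in taps:
        raise ValueError("the highest bit must be one of the taps")
    mask = (1 << nbits) - 1
    state = seed
    steps = 0
    while True:
        bit = 0
        for t in taps:
            bit ^= (state >> (t - 1)) & 1
        state = ((state << 1) | bit) & mask  # shift left, insert feedback bit
        steps += 1
        if state == seed:
            return steps


long_cycle = lfsr_period((4, 3))   # maximum length for 4 bits
short_cycle = lfsr_period((4, 2))  # poorly chosen taps
print(long_cycle, short_cycle)     # prints: 15 6
```

Both registers look the same from the outside, but the second one repeats after only 6 steps instead of 15. With registers of realistic size, a deliberately chosen structure of this kind is much harder to spot.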

During the 1970s, the weaknesses were discovered by several (unwitting) Crypto AG employees and even by customers. Crypto AG usually fended them off with the excuse that the algorithm had been developed a long time ago, and that an improved version would be released soon. It should be no surprise that hiding the weaknesses became increasingly difficult over the years.

The same principle can be applied to software-implementations of cryptographic algorithms as well, but it has become extremely difficult to do that in such a way that it passes existing tests, such as NIST entropy-tests, and can withstand the peer review of the academic community.

Another popular method for weakening a cipher system, is by shortening the effective length of the crypto KEY. The length is typically specified in bits, and in the 1980s, the keys of military cipher systems were typically 128 bits long, which was about twice the length that was needed.

The DES encryption algorithm – that was used for bank transactions – had a key length of 56 bits. It had been developed by Horst Feistel at IBM as Lucifer and had been improved by NSA.

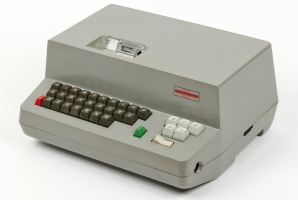

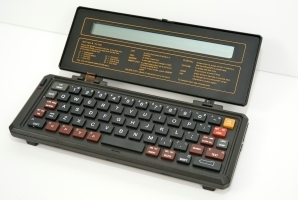

In 1983, the small Dutch company Text Lite, introduced the small PX-1000 pocket terminal shown in the image on the right. It had a built-in text editor and an acoustic modem, by which texts could be uploaded in seconds. The device used DES encryption for the protection of the text messages, which was thought to be useful for journalists and business men on the move.

DES was considered secure at the time. Although it might have been breakable by NSA, doing so would cost a lot of resources (i.e. computing power). With DES available in a consumer product for an affordable price, NSA faced a serious problem, and turned to Philips Usfa for assistance. Philips bought the entire stock of DES-enabled devices and shipped it to the US. The product was then re-released under the Philips brand, with an algorithm that was supplied by NSA.

The new algorithm was a stream cipher with a key-length of no less than 64 bits. This is more than the 56 bits of DES, and suggested that it was at least as strong as DES, and probably even stronger. By reverse engineering the algorithm, Crypto Museum has meanwhile concluded that of the 64 key bits, only 32 are significant. This means that the key length has effectively been halved.

Does this mean that it takes only half the time to break the key? No, as each key-bit doubles the number of combinations, removing 32 bits means that it has become 4,294,967,296 times (2^32) easier to break the key. For example: if we assume that it takes one full year to break a 64-bit key, breaking a 32-bit key would take just 0.007 seconds. A piece of cake for NSA's super computers.
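The arithmetic can be checked with a few lines of Python. The sketch assumes, as in the example above, that an exhaustive search of the full 64-bit key space takes exactly one year of 365 days. The function name is hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds


def scaled_search_time(full_bits, effective_bits, full_time_s):
    """Return the speed-up factor and the search time for the weakened key."""
    factor = 2 ** (full_bits - effective_bits)
    return factor, full_time_s / factor


factor, seconds = scaled_search_time(64, 32, SECONDS_PER_YEAR)
print(factor)             # 4294967296
print(round(seconds, 4))  # 0.0073
```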

Hide the KEY in the ciphertext

It is sometimes suggested that the cryptographic key might be hidden in the output stream (i.e. in the cipher text). Not in a readable form, of course, but when you know where to look, the key will reveal itself. Although this method is prone to discovery, it has in fact been used in the past.

A good example of this technique is the Hagelin CSE-280 voice encryptor, that was introduced by Crypto AG in the early 1970s. The product had been developed in cooperation with the German cipher authority ZfCh (part of the BND), and used forward synchronisation, to allow late entry sync.

The key was hidden in the preamble that was inserted at the beginning of each transmission. If one knew where to look, the entire key could be reconstructed. A few years after the device had been introduced, Crypto AG's chief developer Peter Frutiger suddenly realised how it was done.

It was only a matter of time before customers would discover it too. In 1976, the Syrians became aware of the (badly hidden) key in the preamble, and notified Crypto AG, where Frutiger provided them with a fix that made it instantly unbreakable. NSA was furious and Frutiger got fired for this.

Another example of hiding hints in the output stream is the T-1000/CA, internally known as Beroflex, which was the civil version of the NATO-approved Aroflex, a joint development of Philips and Siemens. It was based on a T-1000 telex.

Whilst the Aroflex was highly secure, Beroflex (T-1000/CA) was not. With the right means and the right knowledge, it could be broken. This was not a trivial task however, and required the use of a special purpose device – a super chip – that had been co-developed by experts at the codebreaking division of the Royal Dutch Navy.

The exploit was based on redundancy in the enciphered message preamble. It caused a bias, which was an unnecessary shortcoming by design. It involved solving a set of binary equations an exponentially large number of times, for which the special purpose device was developed.

Rigging the manual

In some cases, the cipher can be weakened by manipulating the manual. This was done for example with the manuals of the Hagelin CX-52 machine. Although the CX-52 was in theory a virtually unbreakable machine, it could be set up accidentally in such a way that it produced a short cycle (period), which was easy to break.

By manipulating the manual, guidelines were given for ‘proper’ use of the machine, but in reality the user was instructed to configure the machine in such a way that it generated a short cycle, which was easy to break by the NSA.

Key generator with predictive output

Many encryption systems, old and new alike, make use of KEY-generators – commonly pseudo random number generators, or PRNGs – for example for the generation of unique message keys, for generating private and public keys, and for generating the key stream in a stream cipher.

By manipulating the key generator, it is theoretically possible to generate predictable keys, weak keys or predictable cycles. Examples are the mechanical key generator of the Hagelin CX-52M, but also the software-based random number generators (RNGs) in modern software algorithms.

Creating this kind of weakness is neither simple nor trivial, as the weakened key generator has to withstand a variety of existing entropy tests, including the ones published by the US National Institute of Standards and Technology (NIST). Nevertheless, various (potential) backdoors based on weakened PRNGs have been reported in the press, some of which are attributed to the NSA.

In December 2013, Reuters reported that documents released by Edward Snowden indicated that NSA had paid RSA Security US$ 10 million to make Dual Elliptic Curve Deterministic Random Bit Generator (Dual_EC_DRBG) the default in their encryption software. It had already been proven in 2007 that constants could be constructed in such a way as to create a kleptographic backdoor in the NIST-recommended Dual_EC_DRBG [3]. It had been deliberately inserted by NSA as part of its BULLRUN decryption program. NIST promptly withdrew Dual_EC_DRBG from its draft guidance [4].

➤ Wikipedia: Random number generator attack

➤ Wikipedia: Dual_EC_DRBG
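What a predictable key generator means in practice can be shown with a deliberately simple sketch. It uses a linear congruential generator with publicly known constants that hands out its complete internal state as the 'key'. This is a toy stand-in chosen for illustration. It is not Dual_EC_DRBG and not the generator of any real product, and the class and function names are hypothetical.

```python
# Widely published example constants for a linear congruential generator
A, C, M = 1103515245, 12345, 2**31


class WeakKeyGenerator:
    """Toy key generator that leaks its full internal state as output."""

    def __init__(self, seed):
        self.state = seed % M

    def next_key(self):
        self.state = (A * self.state + C) % M
        return self.state


def predict_next(observed_key):
    """An eavesdropper who knows A, C and M needs just one observed key."""
    return (A * observed_key + C) % M


gen = WeakKeyGenerator(seed=20070801)
first = gen.next_key()       # key seen by the eavesdropper
guess = predict_next(first)  # eavesdropper's prediction
second = gen.next_key()      # key actually generated next
print(guess == second)       # True
```

A real backdoor would be far less obvious, as the output has to pass statistical tests, but the effect is the same: whoever knows the hidden structure can derive future keys from observed ones.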

It is often thought by the general public that intelligence agencies have something like a magic password, or master key, that gives them instant access to secure communications of a subject. Although in most cases the backdoor mechanism is far more complex, it is technically possible.

An example of a possible master key is the so-called _NSAKEY that was found in a Microsoft operating system in 1999. The variable contained a 1024-bit public key, that was similar to the cryptographic keys that are used for encryption and authentication. Although Microsoft firmly denied it, it was widely speculated that the key was there to give the NSA access to the system.

There are however a few other possible explanations for the presence of this key – including a backup key, a key for installing NSA proprietary crypto suites, and incompetence on the part of Microsoft, NSA or both – all of which seem plausible. In addition, Dr. Nicko van Someren found a third – far more obscure – key in Windows 2000, which he doubted had a legitimate purpose [5].

➤ Wikipedia: _NSAKEY

A good example of KEY ESCROW is the so-called Clipper Chip, that was introduced by the NSA in the early 1990s, in an attempt to control the use of strong encryption by the general public.

It was the intention to use this chip in all civil encryption products, such as computers, secure telephones, etc., so that everyone would be able to use strong encryption. By forcing people to surrender their keys to the (US) government, law enforcement agencies had the ability to decrypt the communication, should that prove to be necessary during the course of an investigation.

It had to be assumed that the (US) government could be trusted under all circumstances, and that sufficient mechanisms were in place to avoid unwarranted tapping and other abuse, which was heavily disputed by the Electronic Frontier Foundation (EFF) and other privacy organisations.

The device – which used the Skipjack algorithm – was not embraced by the public. In addition, it contained a serious flaw. In 1994, shortly after its introduction, (then) AT&T researcher Matt Blaze discovered the possibility to tamper with the device in such a way that it offered strong encryption whilst disabling the escrow capability. And that was not what the US Government had in mind.

Encryption systems are often attacked by adversaries, by exploiting information that is hidden in the so-called side channels. This is known as a side channel attack. In most cases, side channels are unintended, but they may have been inserted deliberately to give an eavesdropper a way in.

Side channels are often unwanted emanations – such as radio frequency (RF) signals that are emitted by the equipment, or sound generated by a printer or a keyboard – but may also take the form of variations in power consumption (current) that occur when the device is in use (power analysis). In military jargon, unwanted emanations are commonly known as TEMPEST.

An early example of a cryptographic device that exhibited exploitable TEMPEST problems is the Philips Ecolex IV mixer, which was approved for use by NATO. As it was based on the One-Time Tape (OTT) principle, it was theoretically safe. However, in the mid-1960s, the Dutch national physics laboratory TNO proved that minute glitches in the electric signals on the teleprinter data line could be exploited to reconstruct the original plaintext. The problem was eventually solved by adding filters between the device and the teleprinter line.

➤ Wikipedia: Side-channel attack

Unintended weaknesses

Backdoors can also be based on unintentional weaknesses in the design of an encryption device. For example, the Enigma machine – used during WWII by the German Army – cannot encode a letter into itself. This and other weaknesses greatly helped the codebreakers at Bletchley Park, and allowed the cipher to be broken throughout World War II.

Unintended weaknesses were also present in the early mechanical cipher machines of Crypto AG (Hagelin), such as the C-36, M-209, C-446 and CX-52. Although they were theoretically strong, they could accidentally be set up in such a way that they produced a short cycle, which could be broken much more easily. Similar properties can be found in the first generations of electronic crypto devices that are based on shift-registers.

Cryptographic Key Escrow

The Clipper Chip was a cryptographic chipset developed and promoted by the US Government. It was intended for implementation in secure voice equipment, such as crypto phones, and required its users to surrender their cryptographic keys in escrow to the government. This would allow law enforcement agencies (CIA, FBI), to decrypt any traffic for surveillance and intelligence purposes. The controversial Clipper Chip was announced in 1993 and was already defunct by 1996 [1].

The physical chip was designed by Mykotronx (USA) and manufactured by VLSI Technology Inc. (USA). The initial cost for an unprogrammed chip was $16 and a programmed one cost $26.

The image on the right shows the Mykotronx MYK78T chip as it is present inside AT&T's TSD-3600-E telephone encryptor. The chip is soldered directly to the board (i.e. not socketed) and was thought to be tamper-proof (see below). The AT&T TSD-3600 telephone encryptor was the first and only product that featured the ill-fated Clipper Chip before it was withdrawn.

In order to provide a level of protection against misuse of the key by law enforcement agencies, it was agreed that the Unit Key of each device with a Clipper Chip would be held in escrow jointly by two federal agencies. This means that the actual Unit Key was split in two parts, each of which was given to one of the agencies. In order to reconstruct the actual Unit Key, the databases of both agencies had to be accessed and the two half-Unit Keys had to be combined by bitwise XOR [3].
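Splitting a key in two parts that are recombined by XOR can be sketched as follows. The sketch assumes an 80-bit Unit Key (the key length that is given for Skipjack below) and a random first share. How the shares were actually generated and stored by the escrow agencies is not described here, so the function names and the procedure are illustrative assumptions.

```python
import secrets

KEY_BITS = 80  # assumption: same length as a Skipjack key


def split_key(unit_key):
    """Split a key into two shares; neither share alone reveals the key."""
    share_a = secrets.randbits(KEY_BITS)  # random share for agency A
    share_b = unit_key ^ share_a          # matching share for agency B
    return share_a, share_b


def combine(share_a, share_b):
    """Reconstruct the key by bitwise XOR of both shares."""
    return share_a ^ share_b


unit_key = secrets.randbits(KEY_BITS)
share_a, share_b = split_key(unit_key)
recovered = combine(share_a, share_b)
print(recovered == unit_key)  # True
```

As the first share is random, the second share on its own is statistically independent of the key, which is why both databases are needed for reconstruction.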

Skipjack Algorithm

The Clipper Chip used the Skipjack encryption algorithm for the transmission of information, and the Diffie-Hellman key exchange algorithm for the distribution of the cryptographic session keys between peers. Both algorithms are believed to provide good security.

The Skipjack algorithm was developed by the NSA and was classed an NSA Type 2 encryption product. The algorithm was initially classified as SECRET, so that it could not be examined in the usual manner by the encryption research community. After much debate, the Skipjack algorithm was finally declassified and published by the NSA on 24 June 1998 [2]. It uses an 80-bit key and a symmetric cipher algorithm, similar to DES.

Key Escrow

The heart of the concept was Key Escrow. Any device with a Clipper Chip inside (e.g. a crypto phone) would be assigned a cryptographic key, which would be given to the government in escrow. The user would then assume the government to be the so-called trusted third party. If government agencies “established their authority” to intercept a particular communication, the key would be given to that agency, so that all data transmitted by the subject could be decrypted.

The concept of Key Escrow raised much debate and became heavily disputed. The Electronic Frontier Foundation (EFF), established in 1990, preferred the term Key Surrender to stress what, according to them, was actually happening. Together with other public interest organizations, such as the Electronic Privacy Information Center, the EFF challenged the Clipper Chip proposal, saying that it would be illegal and also ineffective, as criminals wouldn’t use it anyway.

In response to the Clipper Chip initiative by the US Government, a number of very strong public encryption packages were released, such as Nautilus, PGP and PGPfone. It was thought that, if strong cryptography was widely available to the public, the government would be unable to stop its use. This approach appeared to be effective, causing the premature ‘death’ of the Clipper Chip, and with it the death of Key Escrow in general.

In 1993, AT&T Bell produced the first and only telephone encryptor based on the Clipper Chip: the TSD-3600. A year later, in 1994, Matt Blaze, a researcher at AT&T, published a major design flaw in the Escrowed Encryption System (EES). A malicious party could tamper with the software and use the Clipper Chip as an encryption device, whilst disabling the key escrow capability.

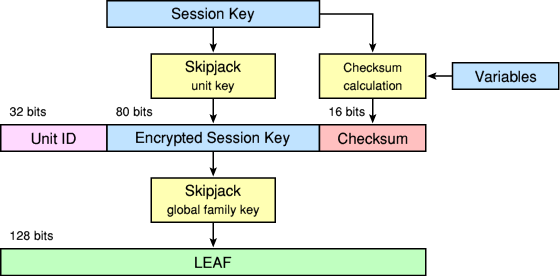

When establishing a connection, the Clipper Chip transmits a 128-bit Law Enforcement Access Field (LEAF). The above diagram shows how the LEAF was established. The LEAF contained information needed by the intercepting agency to establish the encryption key.

To prevent the software from tampering with the LEAF, a 16-bit hash code was included. If the hash didn’t match, the Clipper Chip would not decrypt any messages. The 16-bit hash however, was too short to be safe, and a brute force attack would easily produce the same hash for a fake session key, thus not revealing the actual keys [3]. If a malicious user were to tamper with the device’s software in this way, law enforcement agencies would not be able to reproduce the actual session key. As a result, they would not be able to decrypt the traffic.
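Why 16 bits are too few can be demonstrated with a short experiment. As the checksum used inside the Clipper Chip is not described here, the sketch uses the first two bytes of SHA-256 as a stand-in 16-bit hash, and simply tries bogus 128-bit fields until one produces the same hash as the genuine field. All names and the choice of SHA-256 are assumptions made for illustration only.

```python
import hashlib


def checksum16(data: bytes) -> int:
    """Stand-in 16-bit hash: the first two bytes of SHA-256."""
    return int.from_bytes(hashlib.sha256(data).digest()[:2], "big")


def forge(target: int, max_tries: int = 1 << 22):
    """Try bogus 128-bit fields until one matches the target hash."""
    for counter in range(max_tries):
        candidate = counter.to_bytes(16, "big")
        if checksum16(candidate) == target:
            return candidate, counter + 1
    raise RuntimeError("no match found within max_tries")


genuine = bytes(range(16))  # placeholder for a genuine 128-bit field
target = checksum16(genuine)
bogus, tries = forge(target)
print(f"matching bogus field found after {tries} tries")
```

On average, a match is expected after about 65,536 (2^16) attempts, which a modern computer completes in a fraction of a second.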

Interior

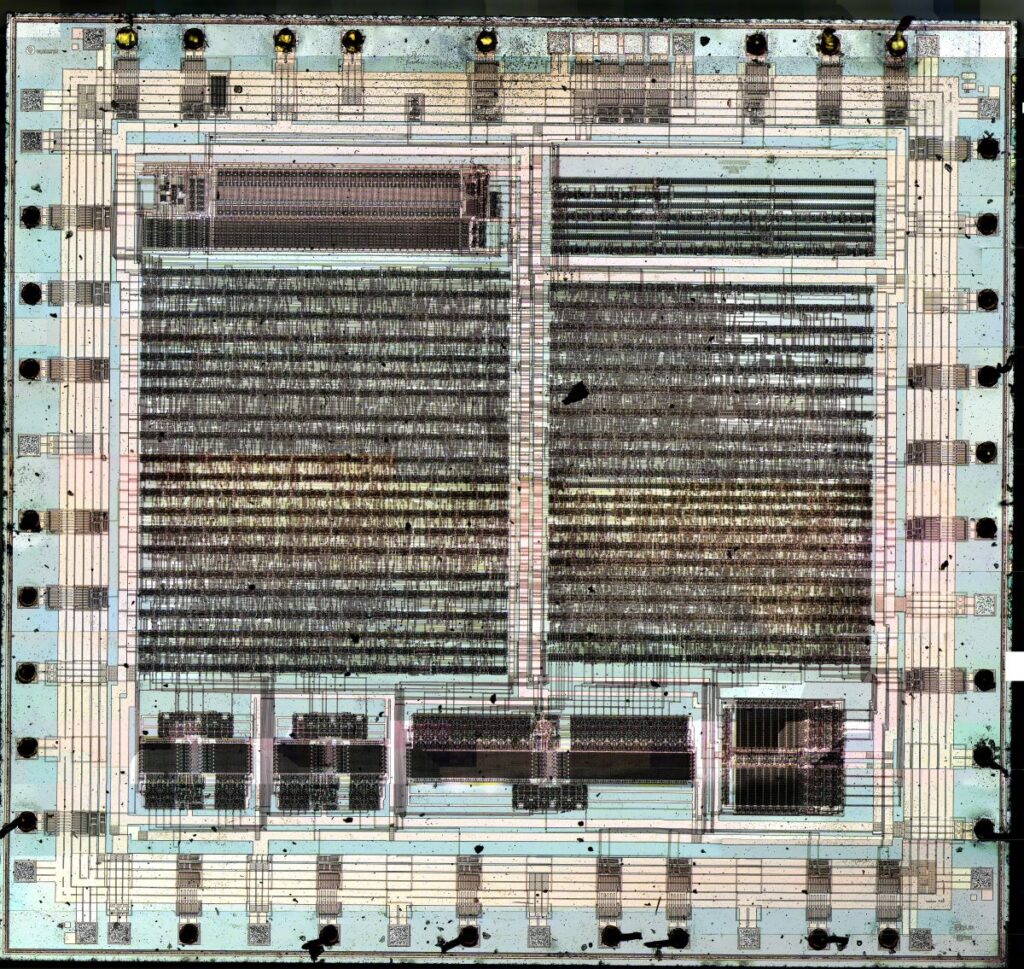

Since the Clipper project has failed, we think it is safe to show you the contents of the chip. Although this is something we would not normally do, this one is too good to be missed. Below, Travis Goodspeed shows us how easy it is to open the package and reverse-engineer a chip [4]. Luckily, according to Kerckhoffs's principle, the secret is in the key and not in the device [5].

The black dots along the four edges are the connection pads of the chip. The image was published on Travis’ photostream on Flickr and is reproduced here with his kind permission.

In some cases, the safety doctrine that is intended to make the device more secure, actually makes the cipher weaker. For example: during WWII, the German cipher authority dictated that a particular cipher wheel should not be used in the same position on two successive days. Whilst this may seem like a good idea, it effectively reduces the maximum number of possible settings.

In some cases, the safety doctrine that is intended to make the device more secure, actually makes the cipher weaker. For example: during WWII, the German cipher authority dictated that a particular cipher wheel should not be used in the same position on two successive days. Whilst this may seem like a good idea, it effectively reduces the maximum number of possible settings.

By far the most common of the unintended weaknesses is operator error, such as choosing a simple or easy to guess password, sending multiple messages on the same key, sending the same message on two different keys, etc. Here are some examples of unintended weaknesses

With a special key combination, the key logger can be turned into a USB memory stick, from which the logged data can be recovered by a malicious party. A more sophisticated example of covert hardware is the addition of a (miniature) chip on the printed circuit board of an existing device. As many companies today have outsourced the production of their electronics, there is always a possibility that a product is maliciously modified by a foreign party. This is particularly the case with critical infrastructure like routers, switches and telecommunications backbone equipment. The problem is made worse by the increasing complexity of modern computers, as a result of which virtually no one knows exactly how they work. A good example is the tiny computer that is hidden inside Intel processors with AMT, and that has been actively exploited as a spying tool [6].

- Crypto Museum, Operation RUBICON. February 2020.

- Wikipedia, Backdoor (computing). Retrieved February 2020.

- Wikipedia, Random number generator attack. Retrieved February 2020.

- Wikipedia, Dual_EC_DRBG. Retrieved February 2020.

- Wikipedia, _NSAKEY. Retrieved February 2020.

- Wikipedia, Intel Active Management Technology. Retrieved November 2020.

- Adding a small chip to the board (can only be done during production process)

- Adding a regular component with a built-in chip ➤ e.g. NSA’s FIREWALK

- Tiny computer inside a regular processor ➤ e.g. Intel AMT

- External key logger (USB or PS/2)

- Key logger (spy) software

- Computer viruses

- Supply chain attack

- Weak keys

- A letter cannot encode into itself (Enigma)

- False security measures

- Operator mistakes

- Software bugs
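The Enigma property listed above, that a letter can never encode into itself, shows how a seemingly harmless design detail becomes a weakness. If a codebreaker suspects that a certain word occurs in the message, every alignment in which a letter of that word coincides with the same letter in the ciphertext can be ruled out immediately. The sketch below illustrates this with a made-up ciphertext and a hypothetical guessed word. The function name `possible_positions` is ours.

```python
def possible_positions(ciphertext: str, guess: str) -> list[int]:
    """Return the start positions at which the guessed word could be located.

    A position is impossible if any letter of the guess lines up with
    the same letter in the ciphertext, since a letter never encodes
    into itself.
    """
    positions = []
    for start in range(len(ciphertext) - len(guess) + 1):
        window = ciphertext[start:start + len(guess)]
        if all(c != g for c, g in zip(window, guess)):
            positions.append(start)
    return positions


# Made-up ciphertext and guessed plaintext word, for illustration only
print(possible_positions("AWETBERTXRQ", "WETTER"))  # [2, 3]
```

In this example, four of the six possible alignments are eliminated without knowing anything about the key.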

Another way of getting surreptitious access to a computer system, such as a personal computer, is by covertly installing additional hardware or software that gives an adversary direct or indirect access to the system and its data. Spyware can be visible, but can also be completely invisible.

An example of a hidden-in-plain-sight device is a so-called key logger that can be installed between keyboard and computer. The image on the right shows two variants: one for USB (left) and one for the old PS/2 keyboard interface.

Items like these can easily be installed in an office, for example by a cleaner, and are hardly noticed in the tangle of wires below your desk. Such a device registers every keystroke, complete with time/date stamp, including your passwords. If the cleaner removes it a few days later, you will never find out that it was ever installed.

Manipulated hardware can be used to eavesdrop on your data, but can also be used as part of a Distributed Denial of Service (DDoS) attack, or to disrupt the critical infrastructure of a company or even an entire country. In many cases, such attacks are carried out by (foreign) state actors. Hardware can also be manipulated by hiding a secret chip inside a regular, inconspicuous component. A good example is the FIREWALK implant of the US National Security Agency (NSA), which is hidden inside a regular RJ45 Ethernet socket of a computer. It is used by the NSA to spy behind firewalls and became publicly known when the ANT catalog was disclosed to the press in December 2013.

This device is particularly dangerous as it cannot be found with a visual inspection. Furthermore, it transmits the intercepted data via radio waves, effectively bypassing firewalls and other network security measures.

Is this problem restricted to high-end (computing) devices? Certainly not. Most modern domestic appliances, such as smart thermometers, smart meters, home automation (domotica) and in particular devices for the Internet of Things (IoT), are badly built, contain badly written software and are rarely properly protected, as a result of which they are extremely vulnerable to manipulation (hacking).

- NSA-backdoored equipment info found OFF this website

- U.S. Government Catalogue of Cellphone Surveillance Devices

- Backdoors on Wikipedia

- National Security Agency

- Central Intelligence Agency

- NSA EXTRACTED INFO

- CRYPTO MUSEUM

- Edward Snowden

- Stingray

- Pegasus Spyware

- X-Keyscore