Arm Sets IPO Price at $51 a Share

Arm set a price of $51 a share as the British chip designer lays the groundwork for the biggest U.S. public offering of the year.

The price was decided on after meetings Wednesday afternoon between underwriters and company executives, according to people familiar with the matter. Initially, the company was eyeing a price of $52 a share, but later settled on $51.

At that price, Arm would be valued at $54.5 billion on a fully diluted basis. That is below the $64 billion Arm owner SoftBank Group recently valued the company at when it bought out a stake held by its Vision Fund.

Arm shares are set to start trading Thursday on Nasdaq under the symbol ARM.

The pricing and trading will be closely watched for signals of the health of the new-issue market, which has been in the doldrums since last year, the slowest for traditional IPOs in the U.S. in at least two decades, as rising interest rates and inflation deterred investors from riskier investments.

If Arm’s stock trades well, it could be a boost for grocery-delivery company Instacart and marketing-automation platform Klaviyo, both of which are planning listings of their own next week.

SoftBank, the only seller in the offering, is set to raise about $5 billion. The Japanese technology investor had planned to sell shares at a price between $47 and $51 apiece. In a sign of the importance of the deal to SoftBank, its chief executive, Masayoshi Son, was expected to attend Wednesday’s pricing meeting virtually.

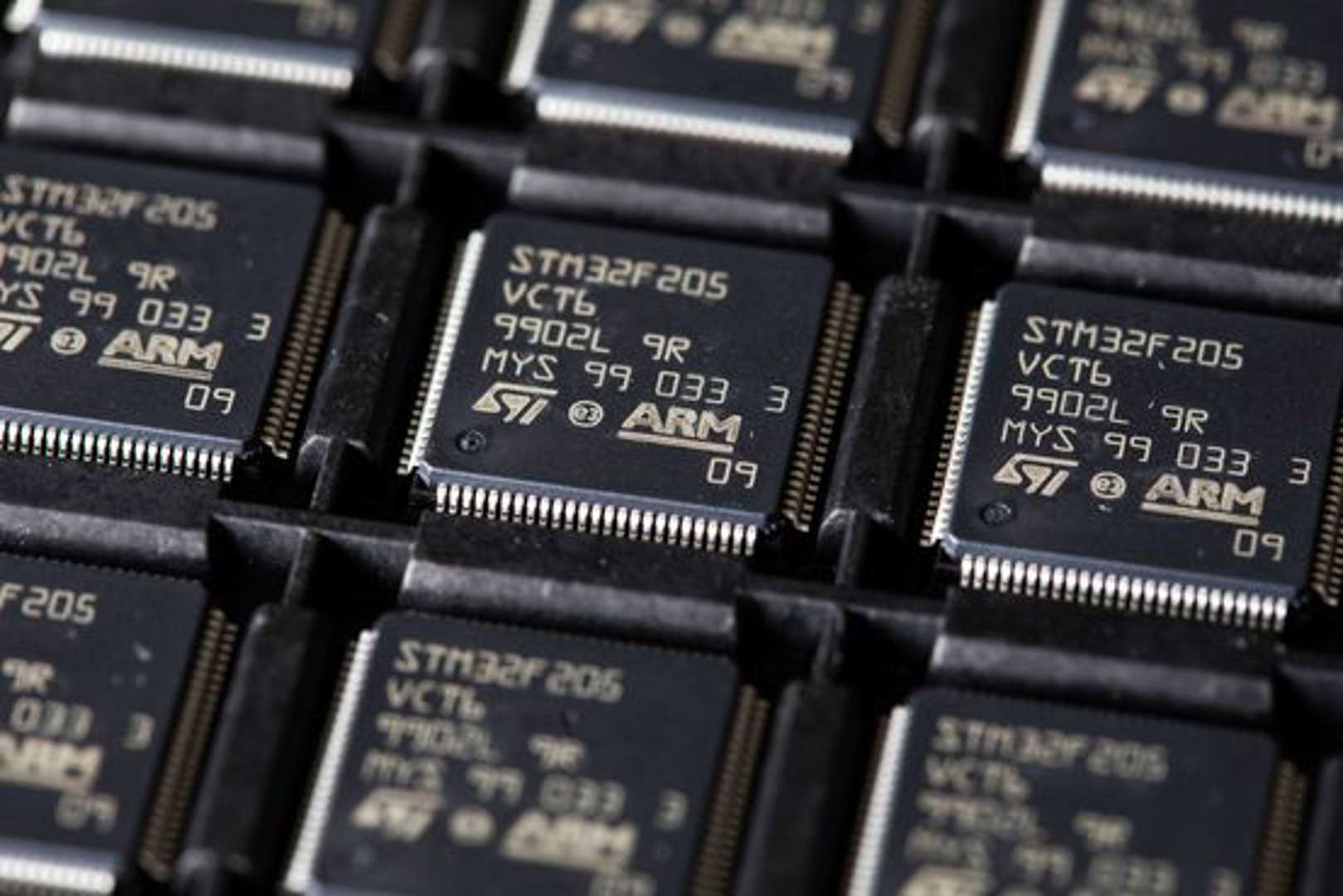

Arm doesn’t make chips, but supplies chip makers with essential circuit designs. Founded in 1990, it focused on the nascent mobile-phone market in its early years and became a dominant supplier to that industry.

Investors in the company are betting that Arm will be able to generate more sales from its current customers and venture into new markets. The company’s circuitry is in more than 99% of smartphones, but it is seeking to make inroads in areas where it is less dominant, including computer networks, cloud-computing and the automotive industry.

The company is also trying to seize on an explosion of interest in artificial intelligence and language-generation systems such as OpenAI’s ChatGPT. AI systems require large amounts of computing power to create and deploy, offering chip makers and suppliers including Arm a huge new market to tap.

Shares in Nvidia, the chip maker at the center of the AI boom, have more than tripled this year, vaulting the company’s value past $1 trillion. Nvidia is incorporating Arm’s circuit designs into some of its coming chips, although Arm isn’t yet at the center of the AI craze.

A Brief History of Arm: Part 1

One of the things I have noticed about Arm in the year I have been working here is how much interest people have in Arm’s history. After a quick Google search and multiple open tabs, I realized that there is a great deal of debate and commentary about the actual history of Arm.

You can easily obtain the timeline of events of Arm as a company, but it doesn’t really tell the story of how Arm came into existence and how it rose to the top of its respective industry. It does, however, give you a full timeline of Arm’s licensees and the key moments in the company’s history. Please join the debate in the comment section if you feel there is more to add or if there are topics you would like to see researched in further blog entries. Also, don’t be afraid to comment with corrections or extra information from the 1980-1997 era on which Part 1 is based.

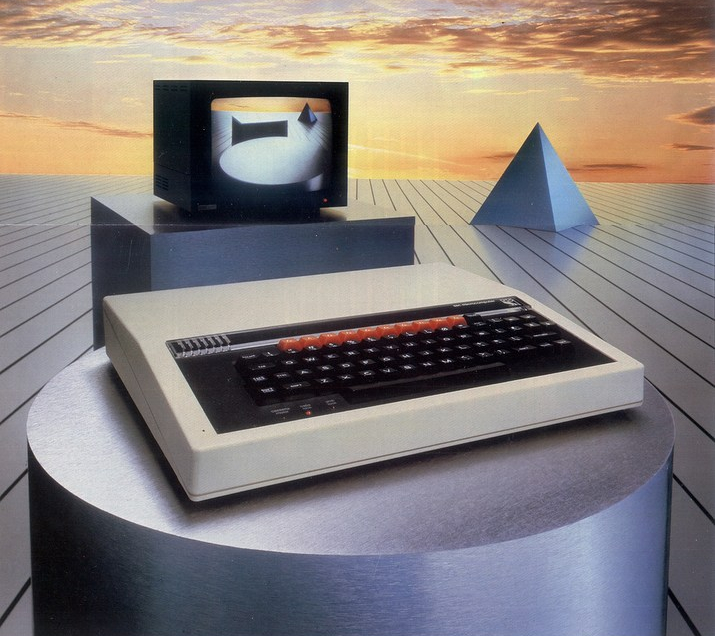

The Beginning: Acorn Computers Ltd

Any British person over the age of 30 will most likely remember Acorn Computers Ltd and the extremely popular BBC Micro (launched with a 6502 processor in 1981). The background of Acorn is a very interesting story in itself and probably deserves its own blog, but it is set in the booming computer industry of the 1980s. The founders of Acorn Computers Ltd were Christopher Curry and Hermann Hauser. Chris Curry was known for working closely with Clive Sinclair of Sinclair Radionics Ltd for over 13 years. After some financial trouble Sinclair sought government help, but when he lost full control of Sinclair Radionics he started a new venture called Science of Cambridge Ltd, later known as Sinclair Research Ltd. Chris Curry was one of the main people in the new venture, but after a disagreement with Sinclair over the direction of the company, Curry decided to leave Sinclair Computers Ltd.

Curry soon partnered with Hermann Hauser, an Austrian physics PhD who had studied English in Cambridge at the age of 15 and liked it so much that he returned for his PhD. Together they set up CPU Ltd, which stood for Cambridge Processor Unit. Its products included microprocessor controllers for fruit machines, which could stop crafty hackers from getting big payouts from the machines. They launched Acorn Computers as the trading name of CPU to keep the two ventures separate. Apparently, the reasoning behind the Acorn name was to be ahead of Apple Computer in the telephone directory!

Fast forward a few years and they landed a fantastic opportunity to produce the BBC Micro, a government initiative to put a computer in every classroom in Britain. Sophie Wilson and Steve Furber were two talented computer scientists from the University of Cambridge who were given the wonderful task of coming up with the microprocessor design for Acorn’s own 32-bit processor, with little to no resources. The design therefore had to be good but simple: Sophie developed the instruction set for the Arm1 and Steve worked on the chip design. The first ever Arm design was created in 808 lines of BASIC. To quote Sophie from a Telegraph interview: ‘We accomplished this by thinking about things very, very carefully beforehand’. Development on the Acorn RISC Machine didn’t start until some time around late 1983 or early 1984. The first chip was delivered to Acorn (then in the building we now know as Arm2) on 26th April 1985. The 30th birthday of the architecture is this year! The Acorn Archimedes, which was released in 1987, was the first RISC-based home computer.

Arm is founded

Arm back then stood for ‘Advanced RISC Machines’ or ‘Acorn RISC Machines’ depending on who you ask, but to answer the age-old question asked by many people these days, it actually doesn’t stand for anything. As the machines it was named after are long since outdated, Arm continued with its name, which funnily enough means nothing! It does have a cool retro logo though!

The Arm logo used until the IPO in 1998.

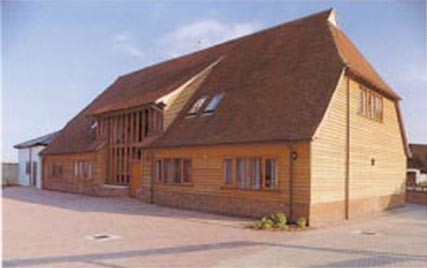

The company was founded in November 1990 as Advanced RISC Machines Ltd and structured as a joint venture between Acorn Computers, Apple Computer (now Apple Inc.) and VLSI Technology. The reason for this was that Apple wanted to use Arm technology but didn’t want to base a product on IP from Acorn, which at the time was considered a competitor. Apple invested the cash, VLSI Technology provided the tools, and Acorn provided the 12 engineers. With that Arm was born, along with its luxury office in Cambridge: a barn!

The Arm headquarters – snazzy looking barn

In an earlier venture, Hermann Hauser had also created the Cambridge Processor Unit or CPU. While at Motorola, Robin Saxby supplied chips to Hermann at CPU. Robin was interviewed and offered the job as CEO around 1991. In 1993 the Apple Newton was launched on Arm architecture. Anyone who has ever used an Apple Newton will know it wasn’t the best piece of technology. Unfortunately, Apple overreached for the technology that was available to it at the time, and the Newton had flaws which vastly lowered its usability. Due to these factors Arm realized it could not sustain success on single products, and Sir Robin introduced the IP business model, which wasn’t common at the time. The Arm processor was licensed to many semiconductor companies for an upfront license fee and then royalties on production silicon. This made Arm a partner to all these companies, effectively trying to speed up their time to market, as it benefited both Arm and its partners. For me personally, this model was one that was never taught to us in school, and it doesn’t really show its head in the business world much, but it creates a fantastic model of using Arm architecture in a large ecosystem, which effectively helps everyone in the industry towards a common goal: creating and producing cutting-edge technology.

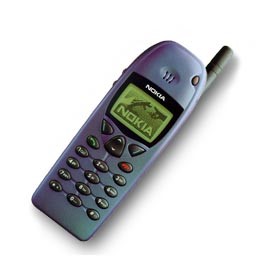

TI, Arm7, and Nokia

The crucial break for Arm came in 1993 with Texas Instruments (TI). This was the breakthrough deal that gave Arm credibility and proved the viability of the company’s novel licensing business model. The deal drove Arm to formalize its licensing business model and also to make more cost-effective products. Similar deals with Samsung and Sharp proved that networking within the industry was crucial in building enthusiastic support for Arm’s products and in gaining new licensing deals. These licensing deals also led to new opportunities for the development of the RISC architecture. Arm’s relatively small size and dynamic culture gave it a response-time advantage in product development. Arm’s hard work came to fruition in 1994, during the mobile revolution, when realistically small mobile devices became a reality. The stars aligned and Arm was in the right place at the right time. Nokia were advised to use an Arm-based system design from TI for their upcoming GSM mobile phone. Nokia were initially against using Arm because of memory concerns: the memory the design needed would raise the overall system cost. This led to Arm creating a custom instruction set with 16 bits per instruction that lowered the memory demands, and this was the design that was licensed by TI and sold to Nokia. The first Arm-powered GSM phone was the Nokia 6110, and it was a massive success. The Arm7 became the flagship mobile design for Arm; it has since been used by over 165 licensees, and over 10 billion chips based on it have been produced since 1994.

Nokia 6110 – the first Arm-powered GSM phone (You may remember playing hours of the game Snake!)

Going Public

By the end of 1997, Arm had grown to become a £26.6m private business with £2.9m net income and the time had come to float the company. Although the company had been preparing to float for three years, the tech sector was in a bubble at the time and everyone involved was very apprehensive, but felt it was the right move for the company to capitalize on the massive investment in the tech sector of the time.

On April 17th, 1998, Arm Holdings PLC completed a joint listing on the London Stock Exchange and NASDAQ with an IPO at £5.75. The reason for the joint listing was twofold. First, NASDAQ was the market through which Arm believed it would gain the sort of valuation it deserved in the tech bubble of the time, which was mainly based in the States. Second, the two major shareholders of Arm were American and English, and Arm wished to allow existing Acorn shareholders in the UK to have continued involvement. Going public caused the stock to soar and turned the small British semiconductor design company into a billion-dollar company in a matter of months!

ARM Holdings was publicly listed in early 1998

A history of ARM, part 1: Building the first chip

In 1983, Acorn Computers needed a CPU. So 10 people built one.

It was 1983, and Acorn Computers was on top of the world. Unfortunately, trouble was just around the corner.

The small UK company was famous for winning a contract with the British Broadcasting Corporation to produce a computer for a national television show. Sales of its BBC Micro were skyrocketing and on pace to exceed 1.2 million units.

But the world of personal computers was changing. The market for cheap 8-bit micros that parents would buy to help kids with their homework was becoming saturated. And new machines from across the pond, like the IBM PC and the upcoming Apple Macintosh, promised significantly more power and ease of use. Acorn needed a way to compete, but it didn’t have much money for research and development.

A seed of an idea

Sophie Wilson, one of the designers of the BBC Micro, had anticipated this problem. She had added a slot called the “Tube” that could connect to a more powerful central processing unit. A slotted CPU could take over the computer, leaving its original 6502 chip free for other tasks.

But what processor should she choose? Wilson and co-designer Steve Furber considered various 16-bit options, such as Intel’s 80286, National Semiconductor’s 32016, and Motorola’s 68000. But none were completely satisfactory.

In a later interview with the Computing History Museum, Wilson explained, “We could see what all these processors did and what they didn’t do. So the first thing they didn’t do was they didn’t make good use of the memory system. The second thing they didn’t do was that they weren’t fast; they weren’t easy to use. We were used to programming the 6502 in the machine code, and we rather hoped that we could get to a power level such that if you wrote in a higher level language you could achieve the same types of results.”

But what was the alternative? Was it even thinkable for tiny Acorn to make its own CPU from scratch? To find out, Wilson and Furber took a trip to National Semiconductor’s factory in Israel. They saw hundreds of engineers and a massive amount of expensive equipment. This confirmed their suspicions that such a task might be beyond them.

Then they visited the Western Design Center in Mesa, Arizona. This company was making the beloved 6502 and designing a 16-bit successor, the 65C816. Wilson and Furber found little more than a “bungalow in a suburb” with a few engineers and some students making diagrams using old Apple II computers and bits of sticky tape.

Suddenly, making their own CPU seemed like it might be possible. Wilson and Furber’s small team had built custom chips before, like the graphics and input/output chips for the BBC Micro. But those designs were simpler and had fewer components than a CPU.

Despite the challenges, upper management at Acorn supported their efforts. In fact, they went beyond mere support. Acorn co-founder Hermann Hauser, who had a Ph.D. in Physics, gave the team copies of IBM research papers describing a new and more powerful type of CPU. It was called RISC, which stood for “reduced instruction set computing.”

Taking a RISC

What exactly did this mean? To answer that question, let’s take a super-simplified crash course on how CPUs work. It starts with transistors, tiny sandwich-like devices made from silicon mixed with different chemicals. Transistors have three connectors. When a voltage is put into the gate input, it allows electricity to flow freely from the source input to the drain output. When there is no voltage on the gate, this electricity stops flowing. Thus, the transistor works as a controllable switch.

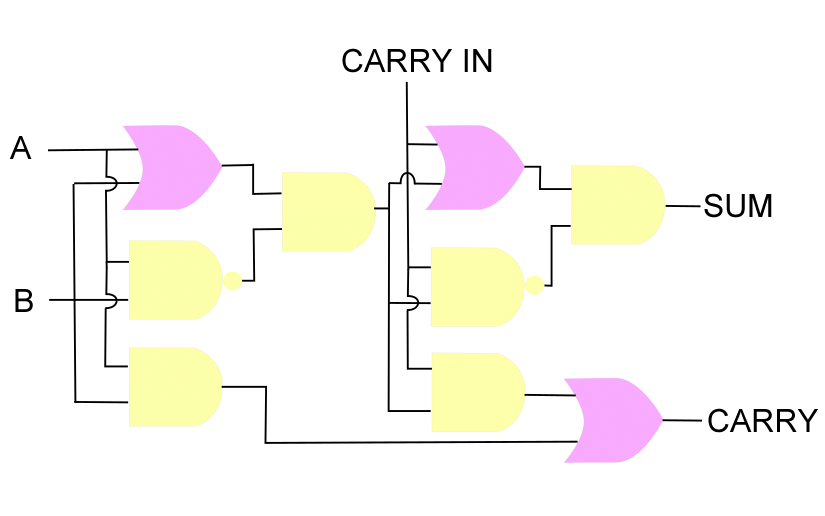

You can combine transistors to form logic gates. For example, two switches connected in a series make an “AND” gate, and two connected in parallel form an “OR” gate. These gates let a computer make choices by comparing numbers.
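To make the series and parallel idea concrete, here is a minimal Python sketch. It treats each transistor as an idealized on/off switch, which is a simplification, and the function names `switch`, `and_gate`, and `or_gate` are made up for this illustration.

```python
def switch(gate: bool, source: bool) -> bool:
    """Idealized transistor: current reaches the drain only if the gate is on."""
    return source and gate


def and_gate(a: bool, b: bool) -> bool:
    # Two switches in series: the output of the first feeds the second,
    # so current only gets through when both gates are on.
    return switch(b, switch(a, True))


def or_gate(a: bool, b: bool) -> bool:
    # Two switches in parallel: current can take either path.
    return switch(a, True) or switch(b, True)


# Print the truth table for both gates.
for a in (False, True):
    for b in (False, True):
        print(int(a), int(b), "AND:", int(and_gate(a, b)), "OR:", int(or_gate(a, b)))
```

Real gates are built from complementary pairs of transistors and have to deal with voltages rather than clean booleans, but the series and parallel wiring is the same idea.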

But how to represent numbers? Computers use binary, or Base 2, by equating a small positive voltage to the number 1 and no voltage to 0. These 1s and 0s are called bits. Since binary arithmetic is so simple, it’s easy to make binary adders that can add 0 or 1 to 0 or 1 and store both the sum and an optional carry bit. Numbers higher than 1 can be represented by adding more adders that work at the same time. The number of simultaneously accessible binary digits is one measure of the “bitness” of a chip. An 8-bit CPU like the 6502 processes numbers in 8-bit chunks.
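As a rough illustration of how single-bit adders chain together, the Python sketch below builds an 8-bit adder out of eight one-bit "full adders," each passing its carry to the next. The names `full_adder` and `add_8bit` are invented for this example, and a real chip does this with gates rather than a loop.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three single bits; return (sum bit, carry-out bit)."""
    total = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return total, carry_out


def add_8bit(x: int, y: int) -> tuple[int, int]:
    """Add two 8-bit numbers one bit position at a time, lowest bit first."""
    result = 0
    carry = 0
    for bit in range(8):
        s, carry = full_adder((x >> bit) & 1, (y >> bit) & 1, carry)
        result |= s << bit
    return result, carry  # the final carry signals overflow past 8 bits


print(add_8bit(100, 27))   # fits in 8 bits
print(add_8bit(200, 100))  # too big for 8 bits, so the carry bit is set
```

The second call shows why "bitness" matters: 300 doesn't fit in 8 bits, so an 8-bit CPU has to handle the carry and do the addition in more than one step.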

Arithmetic and logic are a big part of what a CPU does. But humans need a way to tell it what to do. So every CPU has an instruction set, which is a list of all the ways it can move data in and out of memory, do math calculations, compare numbers, and jump to different parts of a program.

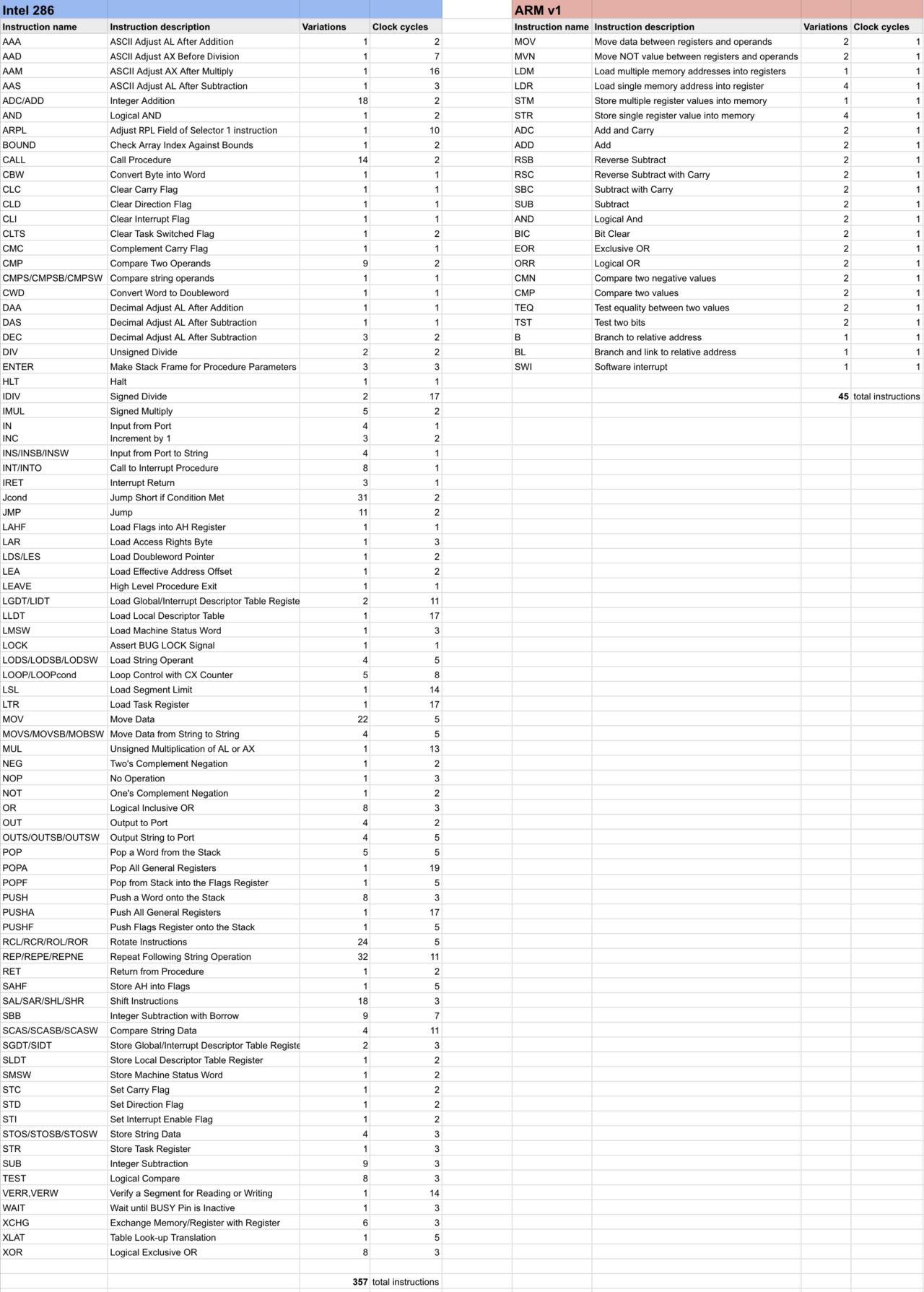

The RISC idea was to drastically reduce the number of instructions, which would simplify the internal design of the CPU. How drastically? The Intel 80286, a 16-bit chip, had a total of 357 unique instructions. The new RISC instruction set that Sophie Wilson was creating would have only 45.

To achieve this simplification, Wilson used a “load and store” architecture. Traditional (complex) CPUs had different instructions to add numbers from two internal “registers” (small chunks of memory inside the chip itself) or to add numbers from two addresses in external memory or for combinations of each. RISC chip instructions, in contrast, would only work on registers. Separate instructions would then move the answer from the registers to external memory.
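Here is a toy Python model of the load-and-store idea. It is not ARM's actual instruction set; the `load`, `store`, and `add` helpers, the four registers, and the memory addresses are all assumptions made up for the sketch.

```python
# Toy machine: a few words of external memory and four internal registers.
memory = {0x10: 7, 0x14: 5, 0x18: 0}
registers = [0, 0, 0, 0]


def load(rd: int, addr: int) -> None:
    """Copy a value from external memory into a register."""
    registers[rd] = memory[addr]


def store(rs: int, addr: int) -> None:
    """Copy a value from a register out to external memory."""
    memory[addr] = registers[rs]


def add(rd: int, rn: int, rm: int) -> None:
    """Arithmetic only ever touches registers, never memory."""
    registers[rd] = registers[rn] + registers[rm]


# Adding two numbers that live in memory takes four simple instructions.
load(0, 0x10)
load(1, 0x14)
add(2, 0, 1)
store(2, 0x18)
print(memory[0x18])
```

A complex-instruction CPU might do the same job with a single memory-to-memory add, which is why RISC programs tend to be longer even though each step is simpler.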

This meant that programs for RISC CPUs typically took more instructions to produce the same result. So how could they be faster? One answer was that the simpler design could be run at a higher clock speed. But another reason was that more complex instructions took longer for a chip to execute. By keeping them simple, you could make every instruction execute in a single clock cycle. This made it easier to use something called pipelining.

Typically, a CPU has to process instructions in stages. It needs to fetch an instruction from memory, decode the instruction, and then execute the instruction. The RISC CPU that Acorn was designing would have a three-stage pipeline. While one part of the chip executed the current instruction, another part was fetching the next one, and so forth.
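The overlap is easier to see laid out cycle by cycle. This Python sketch models an idealized three-stage pipeline in which every stage takes exactly one clock cycle and nothing ever stalls; both are simplifying assumptions, and the four-instruction program is just a placeholder.

```python
STAGES = ("fetch", "decode", "execute")


def pipeline_schedule(instructions: list[str]) -> list[dict[str, str]]:
    """For each clock cycle, report which instruction occupies each stage."""
    total_cycles = len(instructions) + len(STAGES) - 1
    schedule = []
    for cycle in range(total_cycles):
        row = {}
        for depth, stage in enumerate(STAGES):
            index = cycle - depth  # later stages hold older instructions
            if 0 <= index < len(instructions):
                row[stage] = instructions[index]
        schedule.append(row)
    return schedule


program = ["LDR", "ADD", "STR", "CMP"]
schedule = pipeline_schedule(program)
for cycle, row in enumerate(schedule, start=1):
    print(cycle, row)

print("pipelined cycles:", len(schedule))
print("one-at-a-time cycles:", len(program) * len(STAGES))
```

Once the pipeline is full, one instruction finishes every cycle, so the four instructions take 6 cycles instead of 12.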

A disadvantage of the RISC design was that since programs required more instructions, they took up more space in memory. Back in the late 1970s, when the first generation of CPUs were being designed, 1 megabyte of memory cost about $5,000. So any way to reduce the memory size of programs (and having a complex instruction set would help do that) was valuable. This is why chips like the Intel 8080, 8088, and 80286 had so many instructions.

But memory prices were dropping rapidly. By 1994, that 1 megabyte would be under $6. So the extra memory required for a RISC CPU was going to be much less of a problem in the future.

To further future-proof the new Acorn CPU, the team decided to skip 16 bits and go straight to a 32-bit design. This actually made the chip simpler internally because you didn’t have to break up large numbers as often, and you could access all memory addresses directly. (In fact, the first chip only exposed 26 pins of its 32 address lines, since 2 to the power of 26, or 64MB, was a ridiculous amount of memory for the time.)
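The arithmetic behind that 64MB figure is quick to check. In this Python sketch, the 16-bit row is included only for comparison (the 6502 has a 16-bit address bus), and `addressable_bytes` is a made-up helper name.

```python
KIB = 1024
MIB = 1024 * KIB


def addressable_bytes(address_bits: int) -> int:
    """Each extra address line doubles the number of bytes that can be addressed."""
    return 2 ** address_bits


for bits in (16, 26, 32):
    size = addressable_bytes(bits)
    if size >= MIB:
        print(f"{bits} address bits -> {size // MIB} MiB")
    else:
        print(f"{bits} address bits -> {size // KIB} KiB")
```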

All the team needed now was a name for the new CPU. Various options were considered, but in the end, it was called the Acorn RISC Machine, or ARM.

On an ARM and a prayer

The development of the first ARM chip took 18 months. To save money, the team spent a lot of time testing the design before they put it into silicon. Furber wrote an emulator for the ARM CPU in interpreted BASIC on the BBC Micro. This was incredibly slow, of course, but it helped prove the concept and validate that Wilson’s instruction set would work as designed.

According to Wilson, the development process was ambitious but straightforward.

“We thought we were crazy,” she said. “We thought we wouldn’t be able to do it. But we kept finding that there was no actual stopping place. It was just a matter of doing the work.”

Furber did much of the layout and design of the chip itself, while Wilson concentrated on the instruction set. But in truth, the two jobs were deeply intertwined. Picking the code numbers for each instruction isn’t done arbitrarily. Each number is chosen so that when it is translated into binary digits, appropriate wires along the instruction bus activate the right decoding and routing circuits.
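To see what "picking the code numbers" looks like in practice, the Python sketch below pulls a 32-bit ARM data-processing instruction apart into its bit fields. The field positions follow the published ARM encoding for this class of instruction rather than anything stated in this article, the opcode table covers only three operations, and `decode_data_processing` is a name invented for the example.

```python
# A few of the 4-bit opcodes used by ARM data-processing instructions.
OPCODES = {0b0010: "SUB", 0b0100: "ADD", 0b1101: "MOV"}


def decode_data_processing(word: int) -> dict:
    """Split a 32-bit instruction word into its fields by shifting and masking."""
    return {
        "cond": (word >> 28) & 0xF,       # bits 31-28: condition code
        "immediate": (word >> 25) & 0x1,  # bit 25: operand 2 is a constant
        "opcode": OPCODES.get((word >> 21) & 0xF, "?"),  # bits 24-21
        "set_flags": (word >> 20) & 0x1,  # bit 20: update condition flags
        "rn": (word >> 16) & 0xF,         # bits 19-16: first operand register
        "rd": (word >> 12) & 0xF,         # bits 15-12: destination register
        "operand2": word & 0xFFF,         # bits 11-0: second operand
    }


# 0xE0810002 encodes "ADD r0, r1, r2" (r0 = r1 + r2, always executed).
fields = decode_data_processing(0xE0810002)
print(fields)
```

Because each field sits in a fixed group of bits, the hardware can route those bits straight to the register selectors and the arithmetic unit without a complicated decoding step.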

The testing process matured, and Wilson led a team that wrote a more advanced emulator. “With pure instruction simulators, we could have things that were running at hundreds of thousands of ARM instructions per second on a 6502 second processor,” she explained. “And we could write a very large amount of software, port BBC BASIC to the ARM and everything else, second processor, operating system. And this gave us increasing amounts of confidence. Some of this stuff was working better than anything else we’d ever seen, even though we were interpreting ARM machine code. ARM machine code itself was so high-performance that the result of interpreted ARM machine code was often better than compiled code on the same platform.”

These amazing results spurred the small team to finish the job. The design for the first ARM CPU was sent to be fabricated at VLSI Technology Inc., an American semiconductor manufacturing firm. The first version of the chip came back to Acorn on April 26, 1985. Wilson plugged it into the Tube slot on the BBC Micro, loaded up the ported-to-ARM version of BBC BASIC, and tested it with a special PRINT command. The chip replied, “Hello World, I am ARM,” and the team cracked open a bottle of champagne.

Let’s step back for a moment and reflect on what an amazing accomplishment this was. The entire ARM design team consisted of Sophie Wilson, Steve Furber, a couple of additional chip designers, and a four-person team writing testing and verification software. This new 32-bit CPU based on an advanced RISC design was created by fewer than 10 people, and it worked correctly the first time. In contrast, National Semiconductor was up to the 10th revision of the 32016 and was still finding bugs.

How did the Acorn team do this? They designed ARM to be as simple as possible. The V1 chip had only 27,000 transistors (the 80286 had 134,000!) and was fabricated on a 3-micrometer process—that’s 3,000 nanometers, or about a thousand times less granular than today’s CPUs.

At this level of detail, you can almost make out the individual transistors. Look at the register file, for example, and compare it to this interactive block diagram on how random access memory works. You can see the instruction bus carrying data from the input pins and routing it around to the decoders and to the register controls.

As impressive as the first ARM CPU was, it’s important to point out the things it was missing. It had no onboard cache memory. It didn’t have multiplication or division circuits. It also lacked a floating point unit, so operations with non-whole numbers were slower than they could be. However, the use of a simple barrel shifter helped with floating point numbers. The chip ran at a very modest 6 MHz.
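A chip without a multiplier can still multiply in software using only shifts and adds. The Python sketch below shows the classic shift-and-add technique as a general illustration; the article doesn't say how software for the first ARM actually handled multiplication, and `shift_add_multiply` is a hypothetical name.

```python
def shift_add_multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers using only shifts and additions."""
    product = 0
    while b:
        if b & 1:        # lowest bit of b is set: add the current shifted a
            product += a
        a <<= 1          # double a for the next bit position
        b >>= 1          # move on to the next bit of b
    return product


print(shift_add_multiply(13, 11))
```

The loop runs once per bit of the multiplier, which is why a dedicated hardware multiplier was a worthwhile addition in later versions of the chip.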

And how well did this plucky little ARM V1 perform? In benchmarks, it was found to be roughly 10 times faster than an Intel 80286 at the same clock speed and equivalent to a 32-bit Motorola 68020 running at 17 MHz.

The ARM chip was also designed to run at very low power. Wilson explained that this was entirely a cost-saving measure—the team wanted to use a plastic case for the chip instead of a ceramic one, so they set a maximum target of 1 watt of power usage.

But the tools they had for estimating power were primitive. To make sure they didn’t go over the limit and melt the plastic, they were very conservative with every design detail. Because of the simplicity of the design and the low clock rate, the actual power draw ended up at 0.1 watts.

In fact, one of the first test boards the team plugged the ARM into had a broken connection and was not attached to any power at all. It was a big surprise when they found the fault because the CPU had been working the whole time. It had turned on just from electrical leakage coming from the support chips.

The incredibly low power draw of the ARM chip was a “complete accident,” according to Wilson, but it would become important later.

ARMing a new computer

So Acorn had this amazing piece of technology, years ahead of its competitors. Surely financial success was soon to follow, right? Well, if you follow computer history, you can probably guess the answer.

By 1985, sales of the BBC Micro were starting to dry up, squeezed by cheap Sinclair Spectrums on one side and IBM PC clones on the other. Acorn sold a controlling interest in its company to Olivetti, with whom it had previously partnered to make a printer for the BBC Micro. In general, if you’re selling your computer firm to a typewriter company, that’s not a good sign.

Acorn sold a development board with the ARM chip to researchers and hobbyists, but it was limited to the market of existing BBC Micro owners. What the company needed was a brand new computer to really showcase the power of this new CPU.

Before it could do this, it needed to upgrade the original ARM just a bit. The ARM V2 came out in 1986 and added support for coprocessors (such as a floating point coprocessor, which was a popular add-on for computers back then) and built-in hardware multiplication circuits. It was fabricated on a 2 micrometer process, which meant that Acorn could boost the clock rate to 8 MHz without consuming any more power.

But a CPU alone wasn’t enough to build a complete computer. So the team built a graphics controller chip, an input/output controller, and a memory controller. By 1987, all four chips, including the ARM V2, were ready, along with a prototype computer to put them in. To reflect its advanced thinking capabilities, the company named it the Acorn Archimedes.

Given that it was 1987, personal computers were now expected to come equipped with more than just a prompt to type in BASIC instructions. Users demanded pretty graphical user interfaces like those on the Amiga, the Atari ST, and the Macintosh.

Acorn had set up a remote software development team in Palo Alto, California, home of Xerox PARC, to design a next-generation operating system for the Archimedes. It was called ARX, and it promised preemptive multitasking and multiple user support. ARX was slow, but the bigger problem was that it was late. Very late.

The Acorn Archimedes was getting ready to ship, and the company didn’t have an operating system to run on it. This was a crisis situation. So Acorn management went to talk to Paul Fellows, the head of the Acornsoft team who had written a bunch of languages for the BBC Micro. They asked him, “Can you and your team write and ship an operating system for the Archimedes in five months?”

According to Fellows, “I was the fool who said yes, we can do it.”

Five months is not a lot of time to make an operating system from scratch. The quick-and-dirty OS was called “Project Arthur,” possibly after the famous British computer scientist Arthur Norman, but also possibly a shortening of “ARm by THURsday!” It started as an extension of BBC BASIC. Richard Manby wrote a program called “Arthur Desktop” in BASIC, merely as a demonstration of what you could do with the window manager the team had developed. But they were out of time, so the demo was burned into the read-only memory (ROM) of the first batch of computers.

The first Archimedes models shipped in June of 1987, some of them still sporting the BBC branding. The computers were definitely fast, and they were a good deal for the money—the introductory price was 800 pounds, which at the time would have been about $1,300. This compared favorably to a Macintosh II, which cost $5,500 in 1987 and had similar computing power.

But the Macintosh had PageMaker, Microsoft Word, and Excel, along with tons of other useful software. The Archimedes was a new computer platform, and at its release, there wasn’t much software available. The computing world was rapidly converging on IBM PC compatibles and Macintoshes (and for a few more years, Amigas), and everyone else found themselves getting squeezed out. The Archimedes computers got good reviews in the UK press and gained a passionate fan base, but fewer than 100,000 systems were sold over the first couple of years.

The seed grows

Acorn moved quickly to fix bugs in Arthur and work on a replacement operating system, RISC OS, that had more modern features. RISC OS shipped in 1989, and a new revision of the ARM CPU, V3, followed soon afterward.

The V3 chip was built on a 1.5-micrometer process, which shrank the size of its ARM2 core to approximately one-quarter of the available die space. This left room to include 4 kilobytes of fast level-1 cache memory. The clock speed was also increased to 25 MHz.

While these improvements were impressive, engineers like Sophie Wilson believed the ARM chip could be pushed even further. But there were limits to what could be done with Acorn’s rapidly dwindling resources. To realize these dreams, the ARM team needed to look for an outside investor.

And that’s when a representative from another computer company, named after a popular fruit, walked in the door.